We will share openly the firmware code, board design, manufacturing process, API spec, and all necessary sources. In this project, we honor developer’s will and work on the standardization.

Chirimen, a Firefox OS-Powered IoT Single-Board Computer Developed by Mozilla

SolarCity Will Eliminate Over 550 Job Due to State’s Anti-Rooftop Solar Decision

The PUC’s decision to change the rules to punish existing solar customers after the state encouraged them to go solar with rebates is particularly callous and leaves Nevadans to question whether the state would ever place the financial security of regular citizens above the financial interests of NV Energy.

“I contacted Governor Sandoval multiple times after the ruling because I am convinced that he and the PUC didn’t fully understand the consequences of this decision, not only on the thousands of local jobs distributed solar has created, but on the 17,000 Nevadans that installed solar with the state’s encouragement,” said Lyndon Rive, SolarCity’s CEO. “I’m still waiting to speak to the Governor but I am convinced that once he and the Commissioners understand the real impact, that they will do the right thing.”

SolarCity announced on December 23 that as a result of the PUC decision, it had to cease solar sales and installation in the state effective immediately. Other Nevada solar companies with higher cost structures than SolarCity are expected to collectively lay off thousands of additional Nevadans in the coming months.

“Telling employees they can no longer work for SolarCity is the hardest thing we’ve ever done,” continued Rive. “These are hard-working Nevadans and a single government action has put them out of work. This is not how government is supposed to work.”

SolarCity has also closed a training center in West Las Vegas that it opened a little over a month ago. The November press release announcing its opening contained this statement from Governor Sandoval: “I’m proud to celebrate the opening of SolarCity’s new training center, which will make Nevada the regional hub for training workers in the jobs of the 21st century. Our homegrown solar industry has already created over 6,000 good Nevada jobs, and has tremendous potential to continue driving innovation, economic diversification, and opportunity in the Silver State.”

Fortunately, there are other voices speaking up for solar employees and customers. The Nevada Bureau of Consumer Protection is attempting to protect Nevadans by filing a motion to halt implementation of the PUC’s ruling, stating that the order’s impact “is not consistent with the Governor’s stated objectives of SB 374 or the Governor’s initiatives and focus to increase jobs and employment forNevada residents.”

Just weeks after Congress voted with bipartisan support to extend the federal tax credit for solar, Governor Sandoval’s Commission is moving the state backwards. The Governor’s and Commission’s support for a de facto ban on rooftop solar defies public opinion, including the opinion of the members of his own party. According to a recent poll by Moore Information, 73% of registered NevadaRepublicans support the state’s previous rooftop solar rules.

About Press Release

Twitter •

Custom Search

How to C in 2016

This is a draft I wrote in early 2015 and never got around to publishing. Here's the mostly unpolished version because it wasn't doing anybody any good sitting in my drafts folder. The simplest change was updating year 2015 to 2016 at publication time.

Feel free to submit fixes/improvements/complaints as necessary. -Matt

The first rule of C is don't write C if you can avoid it.

If you must write in C, you should follow modern rules.

C has been around since the early 1970s. People have "learned C" at various points during its evolution, but knowledge usually get stuck after learning, so everybody has a different set of things they believe about C based on the year(s) they first started learning.

It's important to not remain stuck in your "things I learned in the 80s/90s" mindset of C development.

This page assumes you are on a modern platform conforming to modern standards and you have no excessive legacy compatability requirements. We shouldn't be globally tied to ancient standards just because some companies refuse to upgrade 20 year old systems.

Preflight

Standard c99.

- clang, default

- C99 is the default C implementation for clang, no extra options needed.

- If you want C11, you need to specify

-std=c11 - clang compiles your source files faster than gcc

- gcc requires you sepecify

-std=c99or-std=c11- gcc builds source files slower than clang, but sometimes generates faster code. Performance comparisons and regression testings are important.

Optimizations

- -O2, -O3

- -Os

-Oshelps if your concern is cache efficiency (which it should be)

Warnings

-Wall -pedantic- during testing you should add

-Werrorand-Wshadowon all your platforms- it can be tricky deploying production source using

-Werrorbecause different platforms and compilers and libraries can emit different warnings. You probably don't want to kill a user's entire build just because their version of GCC on a platform you've never seen complains in new and wonderous ways.

- it can be tricky deploying production source using

- you can be extra fancy and add

-Wstrict-aliasing -Wstrict-overflowtoo. - as of now, Clang reports some valid syntax as a warning, so you should add

-Wno-missing-field-initializers- GCC fixed this unnecessary warning after GCC 4.7.0

Buliding

- Compilation units

- The most common way of building C projects is to decompose every source file into an object file then link all the objects together at the end. This procedure works great for incremental development, but it is suboptimal for performance and optimization. Your compiler can't detect potential optimization across file boundaries this way.

- The most common way of building C projects is to decompose every source file into an object file then link all the objects together at the end. This procedure works great for incremental development, but it is suboptimal for performance and optimization. Your compiler can't detect potential optimization across file boundaries this way.

- LTO — Link Time Optimization

- LTO fixes the "source analysis and optimization across compilation units problem" by annotating object files with intermediate representation so source-aware optimizations can be carried out across compilation units at link time (this slows down the linking process noticeably, but

make -jhelps). - clang LTO (guide)

- gcc LTO

- As of 2016, clang and gcc releases support LTO by just adding

-fltoto your command line options during object compilation and final library/program linking. LTOstil needs some babysitting though. Sometimes, if your program has code not used directly but used by additional libraries, LTO can evict functions or code because it detects, globally when linking, some code is unused/unreachable and doesn't need to be included in the final linked result.

- LTO fixes the "source analysis and optimization across compilation units problem" by annotating object files with intermediate representation so source-aware optimizations can be carried out across compilation units at link time (this slows down the linking process noticeably, but

Arch

-march=native- give the compiler permission to use your CPU's full feature set

- again, performance testing and regression testing is important (then comparing the results across multiple compilers and/or compiler versions) is important to make sure any enabled optimizations don't have adverse side effects.

-msse2and-msse4.2may be useful if you need to target not-your-build-machine features.

Writing code

Types

If you find yourself typing char or int or short or long or unsigned into new code, you're doing it wrong.

For modern programs, you should #import <stdint.h> then use standard types.

The common standard types are:

int8_t,int16_t,int32_t,int64_t— signed integersuint8_t,uint16_t,uint32_t,uint64_t— unsigned integersfloat— standard 32-bit floating pointdouble- standard 64-bit floating point

Notice we don't have char anymore. char is actually misnamed and misused in C.

Developers routinely abuse char to mean "byte" even when they are doing unsigned byte manipulations. It's much cleaner to use uint8_t to mean single a unsigned-byte/octet-value and uint8_t * to mean sequence-of-unsigned-byte/octet-values.

One Exception to never-char

The only acceptable use of char in 2016 is if a pre-existing API requires char (e.g. strncat, printf'ing "%s", ...) or if you're initializing a read-only string (e.g. const char *hello = "hello";) because the C type of string literals ("hello") is char *.

ALSO: In C11 we have native unicode support, and the type of UTF-8 string literals is still char * even for multibyte sequences like const char *abcgrr = u8"abc😬";.

Signedness

At no point should you be typing the word unsigned into your code. We can now write code without the ugly C convention of multi-word types that impair readability as well as usage. Who wants to type unsigned long long int when you can type uint64_t? The <stdint.h> types are more explicit, more exact in meaning, convey intentions better, and are more compact for typographic usage and readability.

But, you may say, "I need to cast pointers to long for dirty pointer math!"

You may say that. But you are wrong.

The correct type for pointer math is uintptr_t defined in <stddef.h>.

Instead of:

long diff = (long)ptrOld - (long)ptrNew;Use:

ptrdiff_t diff = (uintptr_t)ptrOld - (uintptr_t)ptrNew;System-Dependent Types

You continue arguing, "on a 32 bit patform I want 32 bit longs and on a 64 bit platform I want 64 bit longs!"

If we skip over the line of thinking where you are deliberatly introducing difficult to reason about code by using two different sizes depending on platform, you still don't want to use long for system-dependent types.

In these situations, you should use intptr_t— the integer type defined to be the word size of your current platform.

On 32-bit platforms, intptr_t is int32_t.

On 64-bit platforms, intptr_t is int64_t.

intptr_t also comes in a uintptr_t flavor.

For holding pointer offsets, we have the aptly named ptrdiff_t which is the proper type for storing values of subtracted pointers.

Maximum Value Holders

Do you need an integer type capable of holding any integer usable on your system?

People tend to use the largest known type in this case, such as casting smaller unsigned types to uint64_t, but there's a more technically correct way to guarantee any value can hold any other value.

The safest container for any integer is intmax_t (also uintmax_t). You can assign or cast any signed integer to intmax_t with no loss of precision, and you can assign or cast any unsigned integer to uintmax_t with no loss of precision.

That Other Type

The most widely used system-dependent type is size_t.

size_t is defined as "an integer capable of holding the largest array index" which also means it's capable of holding the largest memory offset in your program.

In practical use, size_t is the return type of sizeof operator.

In either case: size_t is practically defined to be the same as uintptr_t on all modern platforms, so on a 32-bit platform size_t is uint32_t and on a 64-bit platform size_t is uint64_t.

There is also ssize_t which is a signed size_t used as the return value from library functions that return -1 on error.

So, should you use size_t for arbitrary system-dependent sizes in your own function parameters? Technically, size_t is the return type of sizeof, so any functions accepting a size value representing a number of bytes is allowed to be a size_t.

Other uses include: size_t is the type of the argument to malloc, and ssize_t is the return type of read() and write().

Printing Types

You should never cast types during printing. You should use proper type specifiers.

These include, but are not limited to:

size_t-%zussize_t-%zdptrdiff_t-%td- raw pointer value -

%p(prints hex value; cast your pointer to(void *)first) - 64-bit types should be printed using

PRIu64(unsigned) andPRId64(signed)- on some platforms a 64-bit value is a

longand on others it's along long - it is actualy impossible to specify a correct cross-platform format string without these format macros because the types change out from under you (and remember, casting values before printing is not safe or logical).

- on some platforms a 64-bit value is a

intptr_t—"%" PRIdPTRuintptr_t—"%" PRIuPTRintmax_t—"%" PRIdMAXuintmax_t—"%" PRIuMAX

One note about the PRI* formatting specifiers: they are macros and the macros expand to proper printf type specifiers on a platform-specific basis. This means you can't do:

printf("Local number: %PRIdPTR\n\n", someIntPtr);but instead, because they are macros, you do:

printf("Local number: %" PRIdPTR "\n\n", someIntPtr);Notice you put the '%' inside your format string, but the type specifier is outside your format string.

C99 allows variable declarations anywhere

So, do NOT do this:

void test(uint8_t input) {

uint32_t b;

if (input > 3) {

return;

}

b = input;

}do THIS instead:

void test(uint8_t input) {

if (input > 3) {

return;

}

uint32_t b = input;

}Caveat: if you have tight loops, test the placement of your initializers. Sometimes scattered declarations can cause unexpected slowdowns. For regular non-fast-path code (which is most of everything in the world), it's best to be as clear as possible, and defininig types next to your initializations is a big readability improvement.

C99 allows for loops to declare counters inline

So, do NOT do this:

uint32_t i;

for (i = 0; i < 10; i++)Do THIS instead:

for (uint32_t i = 0; i < 10; i++)One exception: if you need to retain your counter value after the loop exists, obviously don't declare your counter scoped to the loop itself.

C allows static initialization of stack-allocated arrays

So, do NOT do this:

uint32_t numbers[64];

memset(numbers, 0, sizeof(numbers) * 64);Do THIS instead:

uint32_t numbers[64] = {0};C allows static initilization of stack-allocated structs

So, do NOT do this:

struct thing {

uint64_t index;

uint32_t counter;

};

struct thing localThing;

void initThing(void) {

memset(&localThing, 0, sizeof(localThing));

}Do THIS instead:

struct thing {

uint64_t index;

uint32_t counter;

};

struct thing localThing = {0};If you need to re-initialize already allocated structs, delcare a global zero-struct for later assignment:

struct thing {

uint64_t index;

uint32_t counter;

};

staticconststruct thing localThingNull = {0};

.

.

.

struct thing localThing = {.counter = 3};

.

.

.

localThingReusable = localThingNull;C99 allows variable length array initializsers

So, do NOT do this:

uintmax_t arrayLength = strtoumax(argv[1], NULL, 10);

void *array[];

array = malloc(sizeof(*array) * arrayLength);

/* remember to free(array) when you're done using it */Do THIS instead:

uintmax_t arrayLength = strtoumax(argv[1], NULL, 10);

void *array[arrayLength];

/* no need to free array */NOTE: You must be certain arrayLength is a reasonable size in this situation. (i.e. less than a few KB, sometime your stack will max out at 4 KB on weird platforms). You can't stack allocate huge arrays (millions of entries), but if you know you have a limited count, it's much easier to use C99 VLA capabilities rather than manually requesting heap memory from malloc.

C99 allows annotating non-overlapping pointer parameters

See the restrict keyword (often __restrict)

Parameter Types

If a function accepts arbitrary input data and a length to process, don't restrict the type of the parameter.

So, do NOT do this:

void processAddBytesOverflow(uint8_t *bytes, uint32_t len) {

for (uint32_t i = 0; i < len; i++) {

bytes[0] += bytes[i];

}

}Do THIS instead:

void processAddBytesOverflow(void *input, uint32_t len) {

uint8_t *bytes = input;

for (uint32_t i = 0; i < len; i++) {

bytes[0] += bytes[i];

}

}The input types to your functions describe the interface to your code, not what your code is doing with the parameters. The interface to the code above means "accept a byte array and a length", so you don't want to restrict your callers to only uint8_t byte streams. Maybe your users even want to pass in old-style char * values or something else unexpected.

By declaring your input type as void * and re-casting inside your function, you save the users of your function from having to think about abstractions inside your own library.

Return Parameter Types

C99 gives us the power of <stdbool.h> which defines true to 1 and false to 0.

For success/failure return values, functions should return true or false, not an int32_t return type with manually specifying 1 and 0 (or worse, 1 and -1 (or is it 0 success and 1 failure? or is it 0 success and -1 failure?)).

If a function mutates an input parameter to the extent the parameter is invalidated, instead of returning the altered pointer, your entire API should force double pointers as parameters anywhere an input can be invalidated. Coding with "for some calls, the return value invalidates the input" is too error prone for mass usage.

So, do NOT do this:

void *growthOptional(void *grow, size_t currentLen, size_t newLen) {

if (newLen > currentLen) {

void *newGrow = realloc(grow, newLen);

if (newGrow) {

/* resize success */

grow = newGrow;

} else {

/* resize failed, free existing and signal failure through NULL */

free(grow);

grow = NULL;

}

}

return grow;

}Do THIS instead:

/* Return value: * - 'true' if newLen < currentLen and attempted to grow * - 'true' does not signify success here, the success is still in '*_grow' * - 'false' if newLen >= currentLen */

bool growthOptional(void **_grow, size_t currentLen, size_t newLen) {

void *grow = *_grow;

if (newLen > currentLen) {

void *newGrow = realloc(grow, newLen);

if (newGrow) {

/* resize success */

*_grow = newGrow;

return true;

}

/* resize failure */

free(grow);

*_grow = NULL;

/* for this function, * 'true' doesn't mean success, it means 'attempted grow' */return true;

}

return false;

}Or, even better, Do THIS instead:

typedefenum growthResult {

GROWTH_RESULT_SUCCESS = 1,

GROWTH_RESULT_FAILURE_GROW_NOT_NECESSARY,

GROWTH_RESULT_FAILURE_ALLOCATION_FAILED

} growthResult;

growthResult growthOptional(void **_grow, size_t currentLen, size_t newLen) {

void *grow = *_grow;

if (newLen > currentLen) {

void *newGrow = realloc(grow, newLen);

if (newGrow) {

/* resize success */

*_grow = newGrow;

return GROWTH_RESULT_SUCCESS;

}

/* resize failure, don't remove data because we can signal error */return GROWTH_RESULT_FAILURE_ALLOCATION_FAILED;

}

return GROWTH_RESULT_FAILURE_GROW_NOT_NECESSARY;

}Formatting

Coding style is simultaenously very important and utterly worthless.

If your project has a 50 page coding style guideline, nobody will help you. But, if your code isn't readable, nobody will want to help you.

The solution here is to always use an automated code formatter.

The only usable C formatter as of 2016 is clang-format. clang-format has the best defaults of any automatic C formatter and is still actively developed.

Here's my preferred clang-format script:

#!/usr/bin/env bashclang-format -style="{BasedOnStyle: llvm, IndentWidth: 4, AllowShortFunctionsOnASingleLine: None, KeepEmptyLinesAtTheStartOfBlocks: false}"$@Then call it as:

matt@foo:~/repos/badcode% cleanup-format -i *.{c,h,cc,cpp,hpp,cxx}The -i option to clang-format means overwrite existing files with formatting changes instead of writing to new files or creating backup files.

If you have many files, you can recursively process an entire source tree in parallel:

#!/usr/bin/env bash# note: clang-tidy only accepts one file at a time, but we can run it# parallel against disjoint collections at once.find . \( -name \*.c -or -name \*.cpp -or -name \*.cc \)|xargs -n1 -P4 cleanup-tidy

# clang-format accepts multiple files during one run, but let's limit it to 12# here so we (hopefully) avoid excessive memory usage.find . \( -name \*.c -or -name \*.cpp -or -name \*.cc -or -name \*.h \)|xargs -n12 -P4 cleanup-format -iNow, there's a new cleanup-tidy script there. The contents of cleanup-tidy is:

#!/usr/bin/env bashclang-tidy \

-fix \

-fix-errors \

-header-filter=.* \

--checks=readability-braces-around-statements,misc-macro-parentheses \

$1\-- -I.clang-tidy is policy driven code refactoring tool. The options above enable two fixups:

readability-braces-around-statements— force allif/while/forstatement bodies to be enclosed in braces- It's an accident of history for C to have "brace optional" single statements after loop constructs and conditionals. It is inexcusable to write modern code without braces enforced on every loop and every conditional. Trying to argue "but, the compiler accepts it!" has nothing to do with the readabiltiy, maintainability, understandability, or skimability of code. You aren't programming to please your compiler, you are programming to please future people who have to maintain your current brain state years after everybody has forgotten why anything exists in the first place.

misc-macro-parentheses— automatically add parens around all parameters used in macro bodies

clang-tidy is great when it works, but for some complex code bases it can get stuck. Also, clang-tidy doesn't format, so you need to run clang-format after you tidy to align new braces and reflow macros.

Readability

the writing seems to start slowing down here...

logical self-contained portions of code file

File Structure

Try to limit files to a max of 1,000 lines (1,500 lines in really bad cases). If your tests are in-line with your source file (for testing static functions, etc), adjust as necessary.

misc thoughts

Never use malloc

You should always use calloc. There is no performance penalty for getting zero'd memory. If you don't like the function protype of calloc(object count, size per object) you can wrap it with #define mycalloc(N) calloc(1, N).

Never memset (if you can avoid it)

Never memset(ptr, 0, len) when you can statically initialize a structure (or array) to zero (or reset it back to zero by assigning from a global zero'd out structure).

Learn More

Also see Fixed width integer types (since C99)

Also see Apple's Making Code 64-Bit Clean

Also see the sizes of C types across architectures— unless you keep that entire table in your head for every line of code you write, you should use explicitly defined integer widths and never use char/short/int/long built-in storage types.

Also see size_t and ptrdiff_t

If you really want to write everything perfectly, memorize the thousand individual pages at Secure Coding.

Closing

Writing correct code at scale is essentially impossible. We have multiple operating systems, runtimes, libraries, and hardware platforms to worry about without even considering things like random bit flips in RAM or our block devices lying to us with unknown probability.

The best we can do is write simple, understandable code with as few indirections and as little undocumented magic as possible.

Lyft defies predictions by continuing to grow as a rival to Uber

It's Saturday night in San Francisco's Mission District. Two women stand outside a dive bar in the December chill, looking at their phones. Two Toyota Priuses pull up to the sidewalk.

"Yo, you Kelly?" a driver yells to the taller of the women.

"No," she says, glancing up from her phone.

"I'm Kelly!" the other woman calls out.

"Yo Kelly, I'm your Uber!"

The taller woman walks to the door of the other Prius.

"Are you my Lyft?"

"You Rachel?" the driver says.

"Yes!"

The women climb into their respective rides. The Priuses pull away.

SIGN UP for the free California Inc. business newsletter >>

A year ago, Uber dominated San Francisco. As in many cities, "Uber" had become a verb for ride-hailing apps. But today, you're as likely to spot a Lyft as an Uber in this city.

Those who thought on-demand transportation was a winner-take-all market — that Uber would crush competitors in ride-hailing the way Facebook crushed competitors in social media — are being forced to change their tune.

It turns out that the winner-take-all phenomenon that drives so much of the Internet — a theory also known as "network effects"— may not be as relevant to the transportation industry.

Last week a small, struggling ride-hailing company called Sidecar pulled out of the ride-hailing business. But Lyft's business continues to improve, and it remains an investment magnet. On Monday, the company announced a new $1-billion funding round, led by General Motors, which invested $500 million.

Since shedding its clownmobile front-grille mustache in favor of a more discreet windshield sticker a year ago, Lyft, ride-hailing's second-largest company, has captured 40% market share in San Francisco, and in newer markets, such as Austin, Texas, it nearly matches Uber with 45% market share. Lyft declined to reveal its market share in other markets, including Los Angeles.

------------

FOR THE RECORD

10:16 a.m. An earlier version of this story said Lyft’s international expansion is expected to start next year. It is starting in 2016.

------------

From January 2014 to January 2015, the company grew fivefold in rides and revenue. It's on track for $1 billion in gross revenue by October this year.

Which isn't to say Lyft is about to eclipse Uber.

Lyft is valued at $5.5 billion. Uber's most recent valuation is $62.5 billion. Lyft has 315,000 drivers. Uber has more than 400,000 in the U.S. alone. Uber is available in 68 countries. Lyft operates only in the U.S.; its international expansion into Asia through partnerships isn't due to begin until next year.

Despite the gulf in resources between the industry's number one and two, Lyft continues to grow.

"People thought that this was a winner-takes-all market, and I think everyone's realized that's not the case," said Lyft's co-founder and president, John Zimmer.

Pundits got it wrong, he believes, because winner takes all is such a common scenario in tech. In social media, Facebook came out on top because of the network effect: The more of your friends are on it, the better it gets. That naturally lends itself to one player.

See more of our top stories on Facebook >>

"But in a transportation business, specifically our business, there are very strong network effects, but only to a point," Zimmer said.

Initially, it was thought that whichever service had more drivers would also have more passengers; more drivers meant shorter wait times, more passengers meant more fares. A monopoly would emerge.

"But once you hit three minute pickup times, there's no benefit to having more people on the network," Zimmer said.

In fact, according to economist W. Brian Arthur, a prime theoretician behind network effects, if all services are equal, then network effects may not be so advantageous.

"For example, Uber might have a strong network advantage in an area, but if Lyft comes in and offers a much better product, it can dislodge a company, network effects and all," Arthur said.

"Network effects are a dynamic idea. They're not frozen in time. They do exist, but it doesn't mean someone can't come along and just leapfrog that."

Zimmer likes to compare the dynamic between Uber and Lyft to AT&T and Verizon. When both cell networks hit three bars of coverage, people start to see them as equivalent, and base their spending decisions on other factors, such as brand values and customer experience.

That's what Lyft has spent the last year doing: building the ride-hailing equivalent of cell towers to get pickup times down to three minutes or less.

With that out of the way, it's out to capture market share, by attracting passengers who want an alternative to Uber and tapping into the ocean of people who have never used a ride-hailing service.

For its international expansion, Lyft has partnered with incumbents in markets such as China, India and Southeast Asia, so when U.S Lyft customers open the app while overseas, they can hail a ride from local operators such as Didi Kuaidi (China) and Ola (India), and vice versa.

The company will tackle each geography differently.

But Lyft's domestic strategy interests big investors the most, with activist billionaire Carl Icahn investing $100 million in the San Francisco company, telling the New York Times in May: "There's room for two in this area."

Scott Weiss, a Lyft board member and partner at venture capital firm Andreessen Horowitz, echoed a similar sentiment, comparing the on-demand transportation market to airlines, with the potential for it to be even bigger.

"There are probably 10 to 12 airlines that have multibillion-dollar valuations, and every country has one or two flagship carriers," Weiss said. "We always bet the market is humongous."

However big we think the on-demand and autonomous transportation industry will be, investors say think bigger. The U.S. logistics and transportation industry totaled $1.33 trillion, or 8.5% of annual gross domestic product, in 2012, and it has only grown with the economy, according to the Department of Commerce.

Although it's unclear how much market share a company would need to be financially successful — Uber is, after all, only 5 years old; Lyft is 3 — there's a big chunk of the transportation pie up for grabs.

Which is why the on-demand companies are knuckling down now.

Uber has spent $1 billion in China and India to expand its business, and was the first on-demand transportation service in hundreds of U.S. cities. Leaked financial documents show Lyft spent $96.1 million on marketing in the first half of 2015, more than twice its net revenue in the same period.

Under the guidance of marketing chief Kira Wampler, the company underwent a brand redesign in early 2015, scrubbing clean traces of the fluffy mustache in favor of a sleeker, more sophisticated look. It covered San Francisco with billboards, bus shelter ads and posters plastered down the sides of buildings. The Lyft app recently underwent a complete redesign to offer users more transparency and predictability in its ride pricing.

And its message to potential customers? "We treat you better."

Although passengers anecdotally have had both great and nightmarish experiences with Lyft and Uber drivers, Lyft believes that because its platforms enable customers to tip drivers (Uber's app doesn't have such a feature), drivers are, in theory, incentivized to offer a better service.

When Lyft launched, it also encouraged passengers to ride in the front and to greet drivers with a fist bump. And though it no longer stipulates how passengers should ride, Wampler believes the culture fostered in the days of the fist bump has stuck around, resulting in a more social and fun experience.

According to Weiss, Lyft has cornered the millennial market, a kind of Southwest Airlines (fun, friendly and social) to Uber's Virgin America (more serious and luxurious).

Although Uber first positioned itself with its black town cars as a service for professionals and UberX as a service for everyone, Lyft has capitalized on a segment of the market that isn't satisfied with the Uber experience, said Hugh Tallents, partner at brand strategy firm CG42.

"Uber has in many ways created the category, and with that comes some serious frustrations that Lyft is looking to solve," Tallents said.

Lyft, for example, has a cap on its surge pricing, whereas Uber fares have been known to increase dramatically during busy periods, a frustration for many passengers. Lyft has also created the perception of better driver advocacy, with the ability for riders to tip, and by encouraging passengers to interact with the driver, Tallents said.

"It makes it a much more human interaction, and I think people have been craving that," he said.

If Lyft can capture 75% of the millennial crowd, it will be in a good position for the long term, even if Uber continues to dwarf it, Weiss said.

"This is a market that's going to float at these two ships," he said of Uber and Lyft. "But where Uber stands to lose is the millennial market. Lyft is too consistent. They service that market too well."

Twitter: @traceylien

ALSO

Uber sued by drivers excluded from class-action lawsuit

Faraday Future unveils Batmobile-like electric concept car at CES

CES kicks off with prediction that global tech sales will fall 2% this year

Don't be an architecture hipster

One of my more popular HN comments was a teardown of an all-too-common engineering subculture. The article in question sought to teach “Software Architecture,” but ultimately annoyed me and many other HN readers.

Tl; dr:

Software architecture discussions are so polluted by software-hipsters that the majority of software engineers are disinclined to discuss and study architecture at all.

- Probably studied philosophy, english, film-studies, or engineering.

- Lurks around discussions, hoping to overhear a mistake, any mistake.

- Uses an unnecessarily complex vocabulary

- Has a self-righteous and loud tone of voice, especially when correcting people.

- Enjoys correcting people, even on minor/irrelevant points.

- If asked him a question you can be sure of two things:

1) he will never admit he doesn’t know

2) he will answer with so many niche terms that you will end up more confused than when you began - He may be likened to Dwight Shrute, or the comic-book guy.

- Loves UML making diagrams. Gladly presents them without a legend to people who don’t know how to read UML diagrams.

- Love creating new unintuitive names for very simple ideas (e.g “Broker topology”). Proceeds to use these niche names in discussions and judges other people for not knowing what a “Broken Topology” is.

- Gets really into programming fads that are hailed as panaceas but later get tossed out for a new panacea (e.g. microservices, Layered Architecture, REST).

- Gets very emotionally attached to programming decisions, even if they will have no effect on the user.

- Loves engineering around unlikely “what ifs…”.

- Prefers creating abstraction through classes instead of functions, even if no state is needed.

- Is bored by “practical details” like logging, debugging, convenience, fail-safes, and delivering quickly.

- Maximizes the number and obscurity of patterns used, leading to classes named things like EventLoopListenerFactory.

- Only cares about performance if obscure (e.g. tail-call optimization)

- Isn’t actually very capable creating software (hasn’t won any coding competitions, has trouble with git reset, hasn’t written any software that people enjoy using)

Thus:

Know-it-all / hipster

= Architecture Hipster+ software architecture

I don’t dislike architecture. Architecture is a beautiful study. However, the complexity of the discipline makes an fertile ground for phonies; a space for phonies to use miscommunication as a tool to create an illusion of their own competence.

Show HN: I've made a tool to generate beautiful gradient background images

This tool helps you to generate beautiful blurry background images that you can use in any project. It doesn't use CSS3 gradients, but a rather unique approach. It takes a stock image, extracts a very small area (sample area) and scales it up to 100%. The browser's image smoothing algorithm takes care of the rest.

You can then use the image as an inline, base64 encoded image in any HTML element's background, just click Generate CSS button at the bottom of the app. Select source images from the gallery or use yours, the possibilities are endless.

Goodies

- Use your keyboard: arrow keys help to navigate precisely while the esc key hides the whole UI.

- Save HTTP requests, serve the background instantly, provide unique designs.

- Use your own images

- Share your design via URLs

Credits

This tool was dreamed, developed, directed, executive produced by Tibor Szász. The images used in the project are all public domain stock images. Sources can be found in the image titles. Technologies used: Semantic UI, Dat.GUI, SASS, ES6, Pen and paper.

Nvidia GPUs can break Chrome's incognito mode

When I launched Diablo III, I didn’t expect the pornography I had been looking at hours previously to be splashed on the screen. But that’s exactly what replaced the black loading screen. Like a scene from hollywood, the game temporarily froze as it launched, preventing any attempt to clear the screen. The game unfroze just before clearing the screen, and I was able to grab a screenshot (censored with bright red):

Even though this happened hours later, the contents of the incognito window were perfectly preserved.

So how did this happen? A bug in Nvidia’s GPU drivers. GPU memory is not erased before giving it to an application. This allows the contents of one application to leak into another. When the Chrome incognito window was closed, it’s framebuffer was added to the pool of free GPU memory, but it was not erased. When Diablo requested a framebuffer of it’s own, Nvidia offered up the one previously used by Chrome. Since it wasn’t erased, it still contained the previous contents. Since Diablo doesn’t clear the buffer itself (as it should), the old incognito window was put on the screen again.

In the interest of reproducing the bug, I wrote a program to scan GPU memory for non-zero pixels. It was able to reproduce a reddit page I had closed on another user account a few minutes ago, pixel perfect:

Of course, it doesn’t always work perfectly, sometimes the images are rearranged. I think it has something to do with the page size of memory on a GPU:

This is a serious problem. It breaks the operating system’s user boundaries by allowing non-root users to spy on each other. Additionally, it doesn’t need to be specifically exploited to harm users – it can happen purely by accident. Anyone using a shared computer could be exposing anything displayed on their screen to other users of the computer.

It’s a fairly easy bug to fix. A patch to the GPU drivers could ensure that buffers are always erased before giving them to the application. It’s what an operating system does with the CPU RAM, and it makes sense to use the same rules with a GPU. Additionally, Google Chrome could erase their GPU resources before quitting

I submitted this bug to both Nvidia and Google two years ago. Nvidia acknowledged the problem, but as of January 2016 it has not been fixed. Google marked the bug as won’t fix because google chrome incognito mode is apparently not designed to protect you against other users on the same computer (despite nearly everyone using it for that exact purpose).

Tesla Model S can now park itself

Well, authorities in Hong Kong aren’t going to like this one bit. Back in November, they told Tesla to remotely disable its semiautonomous driving technology until they can confirm that the features, released in mid October, are safe. They were concerned (sort of understandably) by widely reported hijinks by drivers who were using Tesla’s new Autopilot software to shave and sit in the backseat of the car, among other things.

Tesla complied. That doesn’t mean the company isn’t moving forward at full speed, though. Today, it released version 7.1 of its software for the Model S and X that includes a “Summon” feature that enables the car to drive itself without anyone inside.

More specifically, using their key fob, Tesla owners can now direct their cars to park themselves in a spot within 39 feet, and to drive themselves into and out of their parking garages.

In a nod to safety concerns, the company has also now restricted its Autosteer technology on residential roads and roads without a center divider. When Autosteer is engaged on a restricted road, Model S’s speed will be limited to the speed limit of the road, plus an additional 5 mph.

The site Electrek was first to publish the news. We’ve since reached out to Tesla for more information and will update this post as soon as we can.

Back at an October press briefing, when Tesla’s last software update enabled cars to steer, change lanes, and park on their own, Tesla CEO Elon Musk had said he envisioned fully driverless cars.

He said then that while its still “important to exercise great caution at this early stage,” in the long term, he added, “people will not need hands on the wheel — and eventually there won’t be wheels and pedals.”

For now, the wheels and pedals remain. For the beginning and end of the drive, however, the humans are now optional.

You can check out video of the Summon feature in action here, courtesy of an undoubtedly excited Tesla owner:

Homestead High School Newspaper, May 22, 1977

Running costs for running a web app

Domain Registration

Hover handles domain registration for cushionapp.com.

$15/year

Email Hosting

Google Apps hosts email for the team as well as spreadsheets for research and expense tracking.

$50/year

Website CMS

Siteleaf makes it possible to easily update the marketing website without needing to deploy the app.

$5/month

Website Hosting

The marketing website is hosted on GitHub Pages, which is free, static, and version-controlled.

free

Private SCM

GitHub hosts the source code of the app and GitHub Issues is used to track bugs, features, and milestones.

$7/month

Web App Server

The web app currently runs on Heroku, which is very easy to set up and maintain, but very expensive.

$34.50/month

Web App Database

The web app uses a PostgreSQL database running on Heroku, which includes automatic backups.

$50/month

SSL Certificate

The SSL certificate encrypts requests between the app and the API.

$20/month

SSL Management

ExpeditedSSL installed the SSL certificate and automatically renews it each year. The service is a set-it-and-forget-it.

$10/month

Log Management

Papertrail pulls in all the server logs from Cushion, so I can easily query them when I need to dig deeper on an issue.

$7/mo

Exception Tracking

Sentry alerts me of any uncaught exceptions in Cushion and provides all the details I need to debug them.

free

Continuous Delivery

Codeship handles testing, compiling, and deployment of Cushion. It’s incredibly flexible and easy to use.

free

Asset Hosting

The marketing website, javascript files, stylesheets, and images are all stored in AWS S3, which literally costs pennies a month.

$0.42/month

Asset CDN

The asset files are served by AWS CloudFront, a content delivery service that greatly improves transfer speeds.

$0.47/month

Email Marketing

The first newsletter update email was sent using Campaign Monitor.

$15.07

Chat / Notifications

Slack is a great for chat, but also perfect for centralizing notifications from other services, like Stripe and Intercom.

$80/year

Email Marketing

The newsletter update emails are sent using Mailchimp. It’s easy and powerful.

$50/month

Email Delivery

Cushion uses Mandrill to send triggered emails, like password resets or failed payment follow-ups.

free

Metrics

Hosted Graphite serves the dashboard for StatsD server metrics and user activity.

$19/month

Metrics

Librato serves the dashboard for server metrics and user activity, pulling in data from logs and StatsD.

$19/month

Support

Intercom is essential to communicating with Cushion users, providing customer support, user analytics, and in-app messaging.

$175/month

Code Review

Code Climate reviews the app code, grades it, and highlights areas that could be improved. This isn’t a necessary service, but useful for a clean codebase.

$49/month

Web Worker Server

The web workers run background jobs, like generating downloadable backups and requesting 3rd party services.

$74.40/month

Redis Database

The Redis database is used to queue and manage background jobs. Redis Cloud is a one-click Heroku add-on, but very expensive.

$10/month

Webhooks

Zapier automates several workflows on the marketing side of Cushion.

$15/month

Revenue Analytics

Baremetrics integrates with Stripe to analyze revenue and monitor payment activity.

$29/month

Scheduler

The scheduler spawns background jobs every 10 minutes to import new clients, projects, and invoices from Harvest for the auto-import integration.

$1/mo

Website Fonts

The marketing website’s fonts are self-hosted and licensed from MyFonts. The header font is Effra Bold by Dalton Maag and the body font is FF Tisa Pro by Mitja Miklavčič.

$300.51

Web App Fonts

The web app fonts are self-hosted and licensed from Just Another Foundry. The fonts include several styles from the Facit typeface by Tim Ahrens and Shoko Mugikura of Just Another Foundry.

$500.94

Web App Profiler

Skylight profiles server requests and pinpoints which ones are the slowest and which are the heaviest.

$20/month

Flickity JS library

Flickity is *the* responsive and touch-enabled carousel library by David DeSandro. We use it on the marketing page to cycle through user testimonials.

$25

Wildcard SSL Certificate

The wildcard SSL certificate secures all of Cushion’s subdomains. Namecheap came recommended, but there are plenty of other certificate resellers.

$99/year

Stickers

To spread the word in person, we ordered stickers from Sticker Mule. The proof was approved same-day and the stickers arrived 5 days later.

$59

Advertising

We tried Google Adwords for advertising, but quickly realized that we’re better off spreading the word in other ways while our budget is low.

$10/day

Design Process Hosting

$20/year

Payment Processor

Stripe handles payment processing and subscriptions.

2.9% + 30¢/charge

Viskell: Visual programming meets Haskell

README.md

Viskell is an experimental visual programming environment for a typed (Haskell-like) functional programming language. This project is exploring the possibilities and challenges of interactive visual programming in combination with the strengths and weaknesses of functional languages.

While many visual programming languages/environments exist, they are often restricted to some application domain or a specific target audience. The potential of advanced type systems and higher level abstractions in visual programming has barely been explored yet.

Background information

Short project overview presentation as given on the Dutch Functional Programming Day of Jan 8, 2016.

Goals and focus points

- Creating readable and compact visualisations for functional language constructs.

- Immediate feedback on every program modification, avoiding the slow edit-compile-debug cycle.

- Experimenting with a multi-touch focused user interface, supporting multiple independently acting hands.

- Type-guided development: program fragments show their types, and type error are locally visualised.

- Raising the level of abstraction (good support for higher order functions and other common Haskell abstractions).

- Addressing the scalability issues common to creating large visual programs.

Status

Viskell is not yet usable for anything practical, however suggestions from curious souls are very welcome. While being nowhere near a complete programming language, most basic features have an initial implementation. Every aspect of the design and implementation is still work in progress, but ready for demonstration purposes and giving an impression of its potential.

Building Viskell

To build an executable .jar file that includes dependencies, check out this repository, then run

mvn package

Java 8, GHC and QuickCheck are required. Importing as a Maven project into any Java IDE should also work.

You can also download a pre-built binary jar archive.

YouTube change served lower-quality video to Firefox 43 for 2 weeks

In 2016, Intel's Entire Supply Chain Will Be Conflict-Free

Seven years ago, if you bought a new iPhone or a laptop, you were probably also inadvertently supporting warlords and mass rapists in the Democratic Republic of Congo. The country has some of the world's largest deposits of many of the tiny bits of metal, like tin and tungsten, that make up electronics, and they often came from mines whose profits were used to fund the country's ongoing, devastating civil war. Luckily, that's starting to change.

This year, Intel expects its entire supply chain to be conflict-free. It's taken time: the company first set the goal in 2009, and with a massive list of suppliers, it was an overwhelming challenge at first. "We said, we don't want to support conflict, period," says Carolyn Duran, a director at Intel who oversees supply chain sustainability. "How to do that? Nothing was determined."

Phoenix-Metals_IncomingBag-TagMaterial

It started with its own factories, and worked with a handful of other electronic manufacturers to figure out a way to track materials. Metals mined in Africa might first end up in China or Russia, and before companies like Intel started asking questions, it was hard—or impossible—to say where the metals had originated or whether the proceeds had ended up in the hands of warlords.

We said, we don't want to support conflict, period.

Now, nonprofits work with the government to audit mines, and when a mine gets a "green" or good rating, the material that's shipped out ends up in labeled bags that can be tracked to smelting plants around the world. While it isn't a foolproof process, after auditing the mines themselves, Intel believes it works.

"Without owning the mines ourselves we can't be sure 100%, all the time, every day, but if we waited for that we'd never be sourcing from the region at all, and that's not what our intent was," says Duran. "We want to maintain a presence in the region, source responsibly, and help the people on the ground."

Since Intel and other manufacturers began the program, the profits from mines have started flowing to miners themselves rather than to war. In the last study of three of the major materials—tungsten, tantalum, and tin—a nonprofit called the Enough Project found that the amount of money going to conflict had dropped 65%, and it continues to fall.

For tantalum, a blue-gray mineral used in most electronics, almost the entire supply chain is now conflict-free. "There are literally a handful of smelters that aren't there [in terms of verifying that they get material only from conflict-free mines]," Duran says. "And once you hit that inflection point, it's better for the smelters to be in than out. They're actually the outliers now if they're the one or two in the world that aren't going in, it becomes a problem for them from a business perspective."

085-Kigali-CodemibuMine-TunstenOre2

There are still challenges. Gold, for example, comes out of the ground in chunks that are more pure, which makes it easier to smuggle, since you can just slip a small amount in your pocket and then change it for money. For now, that means Intel has to buy gold from other parts of the world, or recycled gold, in order to know that the materials are responsibly sourced. But it's also still working on a process to help the Democratic Republic of Congo track its own supply. Just stopping buying from the country isn't that great a solution either: Duran points out that mining is one of the country's only legitimate sources of income, and an important step in development.

Making sure that every supplier meets standards is also an ongoing challenge—if Intel gets a new supplier, or buys a new company, the process starts again. But the company is now nearly at a point where it can say everything it makes is conflict-free.

We had more industries working together and that gave us more traction than Intel working on its own.

Two years ago, it took the first major step, and announced that its microprocessors were conflict-free, something that helped inspire other manufacturers.

"That helped other companies say 'Hey, yeah, this can be done,'" says Duran. "We had more industries working together and that gave us more traction than Intel working on its own. Some of these smelters are very small parts of our supply chain. But when you have 10 or 20 companies, or two or three industries all setting those same expectations, then it becomes a real solid business case for those smelters and those suppliers to come along and join with us."

The company wants to help the whole global supply chain for these materials—not just its own—become conflict-free. "If every smelter in the world stood up and demonstrated they were sourcing responsibly, there would be no path for the illegitimate materials to go," she says. "You can put up the walls around our own supply chain and say as long as ours is good we're good, but that's not really fixing the fundamental problem. It takes all of us to really fix the problem on the ground."

Don't Configure Applications

Warning

This blog post is a rant. A very. opinionated. long. and angry. rant.

Table of Contents

- What’s in an Application?

- The way we think about Apps is wrong

- Applications are about experience

- How do we solve this?

- Conclusion

- Related articles/stuff

There seems to be a very interesting trend re-emerging in software development lately, influenced by Node’s philosophy, perhaps, where to use anything at all you first need to install a dozen of “dependencies,” spend the next 10 hours configuring it, pray to whatever gods (or beings) you believe in—even if you don’t. And then, if you’re very lucky and the stars are properly aligned in the sky, you’ll be able to see “Hello, world” output on the screen.

Apparently, more configuration always means more good, as evidenced by new, popular tools such as WebPack and Babel.js’s 6th version. Perhaps this also explains why Java was such a popular platform back in the days.

Hypothesis The popularity of a tool is proportional to the amount of time it makes their users waste.

What’s in an Application?

Before we proceed with our very scientific analysis of why programming tools suck completely (or well, most applications, really), let us revisit some of the terminology:

Library, a usually not-so-opinionated solution for a particular problem. Libraries do things, and they allow you to configure how things get done. They also allow you to combine stuff to do more things. Of course, you pay the price of having to configure, program, and combine things. And they won’t help you get any of those three done.

Framework, an opinionated solution for a particular problem. Frameworks do things in a very specific way, which the author considered to be good enough for the particular problem they solve. Frameworks can’t be combined. They can’t be configured. The only thing you can do is program on top of them. A framework helps you very little with programming on top of them, unless you stick close to whatever people have already done before—but then why are you doing those same things again?

Application, an opinionated, properly packaged, usable-out-of-the-box solution for a particular problem. Applications do things in one way, and one way only. You can’t change that. You can’t combine them. You can’t configure them. You can’t program on top of them. Applications just get the job done. They’re like the coffee machine on the office: you press the button, delicious coffee magically appears in your cup.

Given these definitions, we can only conclude that neither WebPack nor Babel fit the definition of an Application, they hardly fit the definition of Framework either, so that only leaves us with Library.

This is hardly surprising considering that these tools come from the same community that defines “transpiler” in the same way “compiler” is defined in literature, then says the words are not synonymous.

Hypothesis Programmers don’t know the meaning of words.

The way we think about Apps is wrong

The title says it all. My job here is done. Thank you for reading.

Or maybe I can be a little more helpful. Let’s see…

Applications are about experience

Let’s go back to the coffee machine analogy for a second.

These are very neat machines. They have one button that says “Get Coffee”. You press it, things happen, you get your coffee.

You are happy and gay.

But… what if you want your coffee in a different way? The coffee machine could add more buttons. Maybe a button where you select if your coffee will have sugar or not. Maybe a button where you select the amount of milk you want to put in your coffee. Maybe a button where you select if you want cream or not. Maybe a button where you can get tea instead of coffee! Maybe…

At this point people have to do so many things to even get something to drink that it feels just as overwhelming as brewing coffee yourself. Not only that, you now need a manual just to operate the machine.

Sadness and frustration fill what seems to be a way too tiny body for the amount of negative feelings this evokes.

If you compare the experience of using both coffee machines, even if the former doesn’t give you the exact kind of coffee you want, you are still far better off.

Hypothesis Humans are bad with choices, and they’d rather not make them.

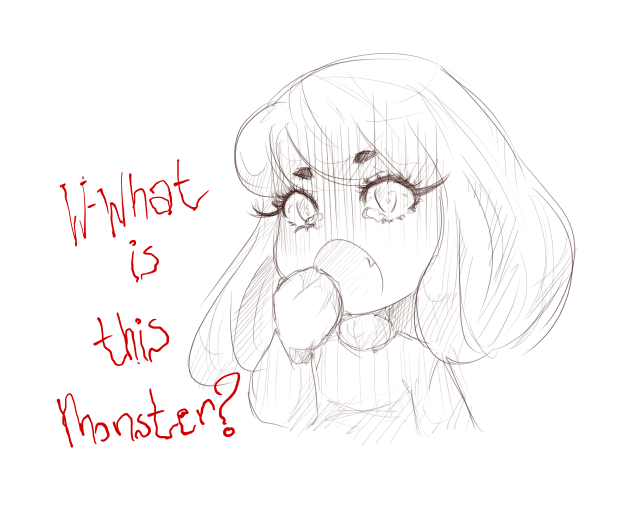

Even though this is common knowledge, computer applications still insist in being the latter. We can compare how it feels to use Babel v5 against how it feels to use Babel v6.

This is Babel v5:

Things with Babel v5 Just Work™

Things with Babel v5 Just Work™

And this is Babel v6:

W-What is this even supposed to mean?

W-What is this even supposed to mean?

So, as you can see, not only does Babel v6 not work at all, it still leads to cryptic errors that give you no insight into how to solve the problem.

Hypothesis Programmers don’t know how to help people fix things.

What do you do? Well, you ask around the Internets why something that should work is not working. You spend time doing that. You have to read the documentation of a thing that just says in its --help command "Usage: babel [options] <files ...>".

Once you get around to reading the documentation, you’ll discover that, even though you should have installed an ES6→ES5 compiler by running npm install babel-cli, to get the compiler to work, you have to actually install the compiler:

I have no words

I have no words

Hypothesis Programmers are just terrible with logic.

So, after reading the two links provided in that note (one of which tells you absolutely nothing about how to solve the problem you just saw, and only serves to make you more confused and frustrated), you install 200MB worth of data just to compile arrow functions and array spreads so they actually work in your environment. You also need to create some kind of text file that you have no idea where to, which format, or how it gets used, because nothing anywhere in the linked pages (or any page in the documentation) says a thing about it.

Did I mention that we’re supposed to just accept all of this as “that’s the way things are” because goddesses forbid you complain about it? Okay, cool.

Things apparently work maybe who knows

Things apparently work maybe who knows

So, what was so wrong with the previous Babel approach that they thought this was a good idea? Well, nothing really. They could have just as easily added a way to configure the program if you wanted to opt-out of something, but instead they decided to burden everyone with making the choice they had already made by installing Babel in the first place.

How do we solve this?

So, how do you create applications that don’t suck, and make your users want to never have known what a computer is? Well, the Human Interface Guidelines from ElementaryOS are a very great place to start. If you copy everything there, even if you don’t design an ElementaryOS application, your application will likely be actually usable by human beings.

I can’t stress the human part of “human-computer interfaces” enough. Please, think about humans when you’re designing something that you want them to use.

Hypothesis Programmers employ robots in their HCI tests.

(T-They do tests to at least figure out if their things are usable, right…?)

1. Convention over configuration

The ideal application is one that works out of the box. No configuration needed. No way of wasting time. Sometimes certain pieces of data are necessary to get the application get up and running. What do you do?

Rule #1: If I need to configure your application, you’re doing it wrong.

“Uh, ask the user to provide that information?”

That’s the one thing you do not do. Most likely this information is already available for you:

“I need the user’s github repository so I can present a list of issues whenever they—” parse it from Git or whatever other thing you can (

package.jsonalready has that information!);“I need the user’s name so I can associate it with—” ask the OS for that information.

“I need the directory where people put their dependencies so—” come up with a convention for it,

node_modulesin Node is a great one. Use it.“I need people to provide a tool to open .xyz f—” associate the extension with external tools, suggest to download and install said tool whenever you see a

.xyzfile.“But I—” GET THAT INFORMATION FROM SOMEWHERE ELSE.

Okay, sometimes there are pieces of information that aren’t already available somewhere in the computer. “For those cases I just ask the user to provide it… right?” That depends, is it absolutely required that the user provide such information in order to run the application at all? If so, you’re probably doing something wrong. Try packaging all of the things that are required to run your application together, instead.

“But at some point in time people will need a different configuration profile, and—” sure. Give them a tool to configure that if and when they absolutely need to, and guide them through choosing the best settings for their use case. Just don’t use configuration files. Don’t require your users to edit configuration files by hand.

2. Hold your user’s hand through using your app

Ideally, an application should work by just running it. Sometimes, however, that’s not really possible. Consider the nvm application, for example. It’s a tool to manage Node versions, so how would it know what version you want to use if you don’t provide it?

Rule #2: If you really need to, hold your user’s hands through using your app.

If you type nvm in the command line, you’ll be presented with an overwhelming amount of information:

This is seriously too much. It doesn’t even fit in one screen

This is seriously too much. It doesn’t even fit in one screen

Worse than that, this text tells you nothing about how you can start using the application. But there’s a nvm use <version> in that list, and that’s exactly what you want, right? You just want to use a particular version of Node. So you try it:

Uh… how do I use it, then?

Uh… how do I use it, then?

It says “version 5.0 is not yet installed”, which is not a very helpful thing to do. You’re there reading this as “Hey, there’s a problem,” and waiting for it to tell you something besides the obvious. You shout back “Okay, I know that, but how do I fix it?” Nothing happens. So what do you do? You read more things.

Back to the first wall of text, there does seem to be something like nvm install <version> in the list. It at least seems to fit the cryptic error description above, so maybe they are related? You don’t know, the text doesn’t tell you in which order you have to run things (why separate things in steps at all if you need to run all of them anyway?!).

“Okay, lemme try this one.”

Wow, am I supposed to just run

Wow, am I supposed to just run install?

You ponder a bit about why the application decided that it was a good idea to include two commands that do the same thing, but only one which works. Nevertheless, you settle for the fact that things (at least apparently) work now.

And then you open a new shell to run a different application, so of course that application also depends on Node, and:

But… it was working just fine a couple of seconds ago!

But… it was working just fine a couple of seconds ago!

You stare blankly at your screen trying to understand what just happened. Node was working just fine, and then it wasn’t. Maybe nvm is just terrible and breaks randomly? You decide to try node --version in the other shell, which to your surprise gives you what you expect: v5.0.0. “Am I just supposed to run nvm install <version> in every shell I open!?”

You decide to run nvm install <version> again just to test your theory:

It works. Really?

It works. Really?

You’re flabbergasted. Never in your life you would have thought that people would try to make it so hard for one to use things on their computers. Then you remember your previous experience with Babel. “Okay, maybe they would,” you conclude, with a heavy sigh.

But there must be something else, right? They can’t just expect you to run nvm install <version> all the time (actually nvm run <version>, but you don’t know that, it didn’t seem to work for you when you tried).

You go back to the first wall of text, searching for anything that would hint at not having to do this work all the time. nvm alias default <version> catches your attention. It does mention “default. node. shell”, after all. Those are, like, keywords, right? You decide to give it a try:

Welp, why would you require all this?

Welp, why would you require all this?

“Oh goddess, finally!” you exclaim, exasperated. You feel as if a major weight had been lifted from your shoulder, but you’re too frustrated with how bad computers are to think properly about this. “Now I can actually move on and get some work done,” you conclude, grumbling.

Could this have been different? Sure, let’s look at another quite possible interaction between the aforementioned human and nvm. Let’s assume that nvm decided that their goal is to help people manage their Node versions. With this goal in mind, nvm has traced a very common use case for new (and long-term) users of the application:

- You download the

nvmapplication because you wantnvmto install and configure different Node versions for you. - You care about having a default node version, after all, since nvm is going to be managing that.

- You care about running node with the least amount of commands possible. Ideally you’d just write

nodeand things would work.

You start with running nvm. The application notices it can’t actually get any work done for you, so it suggests some common actions:

A more helpful way of telling your users they need to do something

A more helpful way of telling your users they need to do something

You note that it’s still necessary to configure nvm for it to work. An interactive screen where the application suggests installing the stable version for you (which is a good default) would allow you to be done with this configuration biz faster. Nonetheless, this is already a huge improvement over the previous screen. It at least tells you what you have to do to get it working.

One command to rule them all

One command to rule them all

Oops, you didn’t have any version of Node installed before running use. But the command acknowledged so and installed the version for you. It was the only sensible thing to do, there was nothing else that could be done there.

A quick reminder When your application sees an error, and there’s only one way to fix it, fix it.

After installing it, you’re asked if you’d like to make the version we just installed your default Node version. It dutifully notes that you can change it at any point in time, if you want to. Running npm use <version> is a common thing to do—it’s the whole point of the application. Given this, it’s only reasonable to give you an option of reducing that work.

“B-But this doesn’t apply to every program, right? How do you do this for a programming language’s implementation?” An application developer cries. Well, here’s an example from Amber Smalltalk

Interactive tutorials for learning PLs are a really good idea

Interactive tutorials for learning PLs are a really good idea

3. Things will fail, tell me how to fix it!

The best way of handling unexpected actions in your application is just fixing it for the user and proceeding to do what the user wants—if you can solve it in a sensible manner. This is not always possible, however.

Rule #3: If anything goes wrong, you must help your users fix those problems in the best way possible.

Consider the following example:

v8 has one of the worst error messages I’ve ever seen

v8 has one of the worst error messages I’ve ever seen

So, JSON.parse() says “Sorry, I can’t parse this, there’s a b here”. A b WHERE?! What should have been there? Do you realise there’s like two b’s in this code? Which one is wrong? Are both wrong? How do I fix this?

As you can see, v8’s error messages, even though they have improved a little bit, remain largely useless. This, in particular, is one of those errors that a little bit more of work on your JSON parser could actually give the user a very useful error message:

A few simple changes to the parser and you can get this

A few simple changes to the parser and you can get this

Now, not only the error message helps you understand what is wrong, it also tells you how to fix it. Telling the user how to fix something is desirable, but not always possible. Still, one has to at least make some effort to tell the user what’s wrong, in a way they can understand, instead just screaming “I’m sorry Dave, I can’t do that.”

Speaking of usable error messages, Elm has a very good blog post on the subject, and also one of the most helpful error messages, as far as static compilers go:

The above image is shamelessly stolen from Elm’s blog post

The above image is shamelessly stolen from Elm’s blog post

4. Stop thinking about documentation!

Documentation is good for libraries and frameworks. Documentation is terrible for applications. If you think that your application really requires documentation, it’s too complicated, and it’s going to make your users frustrated.

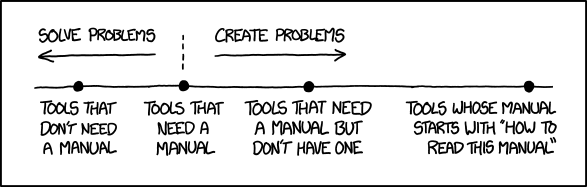

This is true because it’s on XKCD:

I rest my case

I rest my case

Instead of documentation, just have your application do its job by simply running it. No configuration. No nothing. Just let the user get the stuff done.

Rule #4: Don’t require users to read manuals before using your thing.

Apple gets it, you can just copy them:

Conclusion

Computers suck.

Applications suck.

We can make them better.

But y’all aren’t even trying for fuck’s sake.

(I’m guilty of this too)

Related articles/stuff

- Software You Can Use

- Glyph

- JavaScript Fatigue

- Eric Clemmons

- The Future of Programming

- Bret Victor

- A Whole New World

- Gary Bernhardt

- Self and Self: Whys and Wherefores

- David Ungar

Footnotes

Aaron Swartz died three years ago today

The New Press has put out abook collecting some of his writing. I contributed a short piece, as did some other people who knew him; since my contract allows me to, and since no-one conceivably wants to buy the book to see what I have to say, I’m putting it below the fold.

People who didn’t know Aaron remember him for his tireless work for a variety of public causes. They usually don’t realize that this work went together with a myriad of private kindnesses. I got to know Aaron as an extraordinarily intelligent commentator on Crooked Timber, an academic blog that I contribute to. At first, I didn’t know about the other great things that he had done; he didn’t talk about them unless he was pressed. He just wanted to get involved in conversations with other people who were interested in the same topics of political inquiry and social justice as he was.

He also wanted to help – when we had major technical difficulties because our audience was outpacing the capacities of the server space we had leased, he suggested, without any fuss, that he would be very happy to take over our technical responsibilities and provide us all the facilities we needed. He privately helped many other people, in equally unfussy ways. Rick Perlstein, the political historian of the rise of the right, is now famous. Before he was well known, Aaron came across his work, realized that he didn’t have a website, and offered to make one for him. Rick was a bit nonplussed to receive so generous an offer from a complete stranger, but quickly realized that Aaron was for real. They became good friends.

We asked Aaron to guestblog for us for seminars, but also we just published his work when he had something to say, and asked us if we were interested (we said yes, and for good reason). He brought many worlds together. His activism went hand-in-hand with a deep commitment to the intellect and to figuring out the world through argument. This could discomfit other activists, since it meant that he often changed his mind. He had the profound intellectual curiosity of a first rate scholar without the self-importance that usually accompanies it. If he could be accused of arrogance (and some people did so accuse him) it was a curiously egoless form. He simply expected other people to live up to the same exacting standards that he imposed upon himself. But he could also take a joke. When the New York Times ran a story on him with an accompanying photo, which portrayed him brooding and backlit behind the screen of his Macbook, I teased him about it, and he was clearly delighted to be teased.

It’s hard to face up to what we’ve lost. He wasn’t just an activist, or a programmer, or an intellectual. He was a builder of bridges between many different people from many different worlds. I only began to realize how many people he corresponded with after he had died. When I write now, it is often in an imaginary dialogue with him, where I imagine his impatience with this or that plodding sentence which is too far removed from the real concerns of real people. That imaginary dialogue is no substitute for the real thing. He was smarter than I am, and always capable of surprising me. I miss him very much.

2015 Was the “Year of the Robotics Startups”

2015 was an insane year for robotics companies; they raised $922.7M in VC funding -- 170% more than in 2014. I'm almost certain that it exceeds $1 Billion, especially if you account for funding events in Asia (opaque to me) or if you take into account companies at the periphery of robotics (sensing, software, 3D printing, etc). Similar to previous years, a large portion of the funding went to medical companies and drone companies, but we also saw a lot of late-stage consumer robot financings this year (such as Jibo and Sphero) -- but comparatively few agricultural or service robots. Still, I think it's safe to say: 2015 was the year of the robotics startup!

===> 2015: $922.7 Million <===

Total: > $922.7 Million†*

* I only included funding events with public sources, and I opted to leave off any funding that was under $1 Million. I also ignored crowdfunding, since these arrangements do not typically involve equity transfer. Furthermore, it is harder and harder to determine "what constitutes a robotics company." I used my own dictatorial judgment; feel free to disagree in the comments. For example, I generally don't include 3D printers in my robotics tally, so that meant I didn't count Carbon3D's massive $100M round. I also don't count corporate "spinouts" or "joint ventures" such as Toyata's $1B Robotics R&D Efforts in Silicon Valley or Softbank's $236M Robotics Holding Group. Finally, I almost always miss a few funding rounds; let me know in the comments (with a credible source!).

† Many thanks to Frank Tobe and Tim Smith for providing me some lists of their own. In fact, Frank does such a good job that I may pass the torch off to him in future years. There's little sense in duplicating our efforts... plus then I can spend the 10(ish) hours it takes to prepare this list on something more personally fulfilling -- like hacking on robots or writing more techy Hizook articles. ;)

Auris Surgical ($150 Million)

Auris is developing a microsurgical robot for eye surgeries, such as cataract removal. They raised $150 Million this year.

DJI ($75 Million)

DJI is the leader in the drone space. They were predicting $1B in sales in 2015, and they raised $75 Million this year -- those are insane numbers (large revenue and small funding).

3D Robotics ($64 Million)